It was great to attend the IATEFL Conference last week after a few years away. In particular, after what feels like years of working online, it was just so nice to meet people in person again and to give a talk to a real audience.

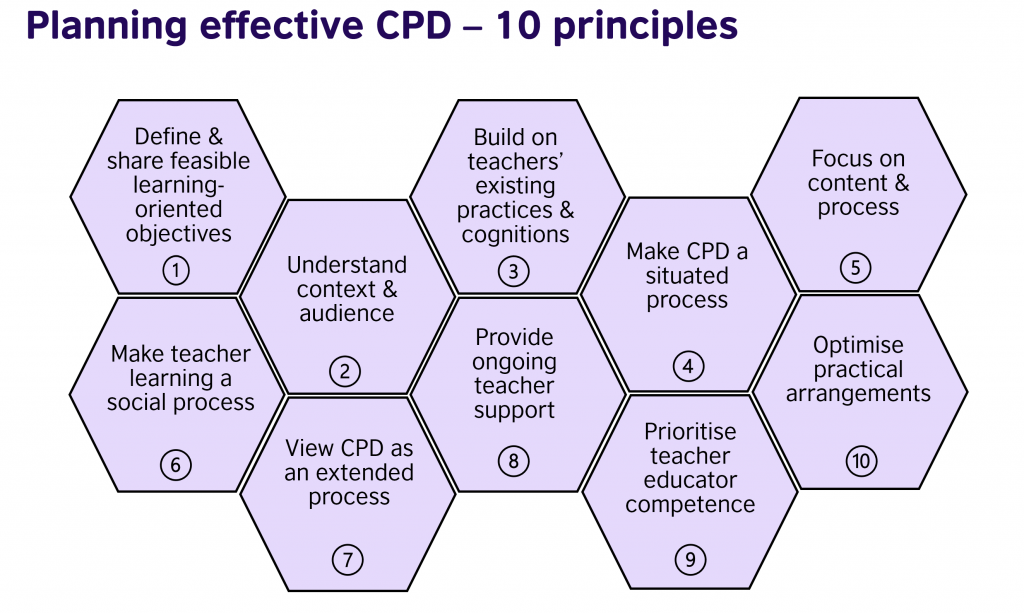

In the talk, I discussed a number of factors which – based on my experience as an evaluator over the last 10 years – make a difference to the effectiveness of organised professional development programmes for English language teachers. I went back and read several evaluation reports I had written – 12 to be precise, from 12 programmes in 12 different countries involving around 30,000 teachers in total – and looked for insight into the elements that enabled these programmes to achieve more (or, conversely, that limited their impact).

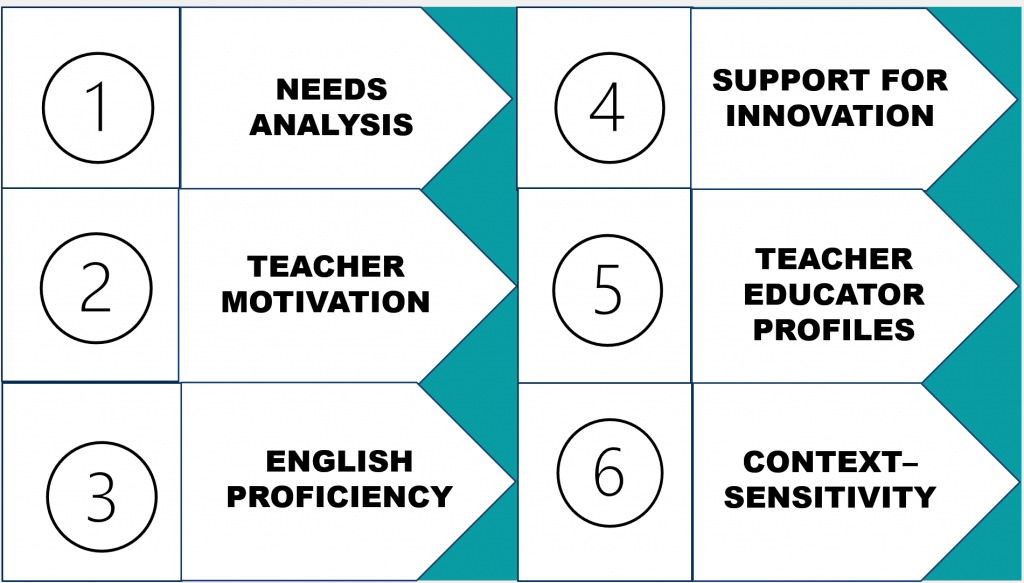

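There were too many factors to cover in the 30 minutes available, so I focused on six: needs analysis, teacher motivation, teachers’ English proficiency, support for innovation, in-service teacher educators, and the socio-educational context. (On further reflection, I’ve decided to replace ‘teacher autonomy’ in my original list with ‘teacher motivation’.)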

Overall, when a professional development programme addresses these factors carefully, it is more likely to have a positive impact on teachers, including by promoting pedagogical change. The practical messages for anyone designing a programme to support the professional learning of English language teachers are as follows:

- Needs analysis informed by multiple sources of information (including, crucially, evidence of what teachers do in classrooms) allows appropriate decisions to be made about the areas of their work that teachers need support with; relying on a single source of information (such as what teachers say they need) is a less effective approach;

- Understanding, strengthening and sustaining teacher motivation to grow professionally and to innovate in the classroom are key elements in effective professional development programme design (teacher motivation, in my view, is more important than teacher autonomy – especially when joining a programme is mandatory);

- English teachers’ levels of English proficiency vary considerably across global contexts; where they are modest (such as A2, which is often below the target set for learners), it is important for professional development to provide sustained opportunities (i.e. many hours) for teachers to improve their English and not to focus only on teaching skills in the hope that teachers’ English will develop incidentally;

- Without support for innovation as a design feature of professional development programmes, teachers will find it difficult to change, in any sustained manner, how they teach; support for change at classroom and school level is thus vital (professional development which does not impact on what teachers do is ultimately of questionable value);

- In-service teacher educators (whatever particular name they are given) play a vital role in supporting teacher professional development; on effective programmes, teacher educators meet ‘advanced’ criteria and receive preparation for their role as well as ongoing support;

- Key decisions about professional development programmes should be informed by an understanding of the socio-educational context in which the programme will take place. For example, asking teachers to work at the weekend may be acceptable in some contexts but not in others; expecting teachers to engage in collaborative reflection will not be feasible (without support) if ‘collaboration’ and ‘reflection’ are not part of teachers’ current professional practices; and whether teachers work with prescribed textbooks and assessment procedures also makes a big difference to the kinds of pedagogical change professional development can feasibly aim for.

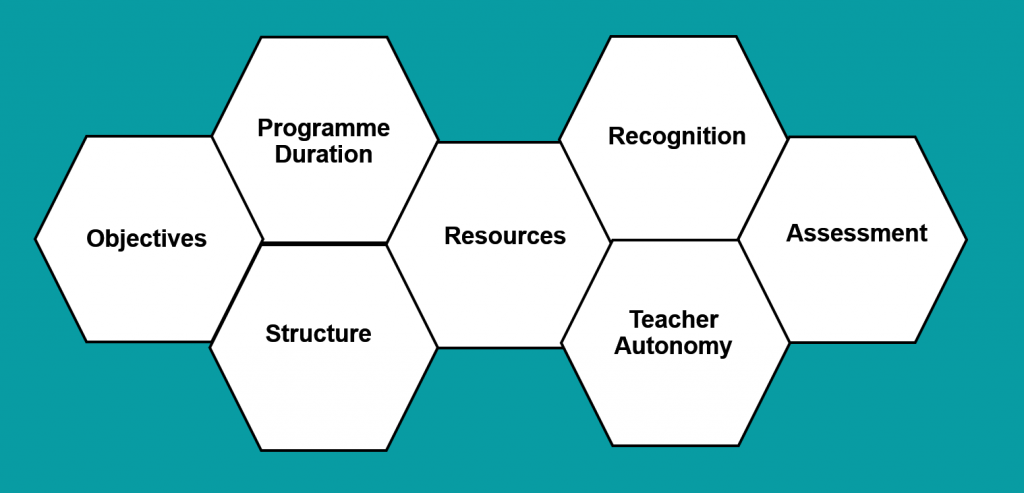

There are several other factors that should be considered when professional development programmes are being designed but which I did not discuss in the talk (see below). In some cases, their presence is always facilitative (such as appropriate objectives or resources), while in others appropriate choices need to be made among the options available (such as programme duration: longer programmes are not necessarily better than shorter ones). Teacher autonomy is desirable but, in my experience, not vital. And where professional development aims for systemic change (for example, in an organisation, district or even country), too much individual teacher autonomy can be counter-productive. ‘Controlled autonomy’ is a concept I find useful: space for individual choice within a broader standardised professional development framework.

Readers may also be interested in going back to my earlier post on designing effective CPD, to which the ideas presented in this year’s IATEFL talk are related. A recent research report on in-service trainers may also be of interest.

It would be interesting to hear from readers who are involved in designing professional development programmes – does my analysis reflect your experience and are there other key factors that could be mentioned?